ChatGPT destroys critical thinking

Neuroscientists have figured out what happens to people’s brains when they constantly rely on artificial intelligence while working with texts.

Active users of chatbots run the risk of losing the ability to analyze information and think critically on their own, experts in artificial intelligence from the Massachusetts Institute of Technology wrote in a recent article. According to the published data, this conclusion comes from experiments involving 54 volunteers.

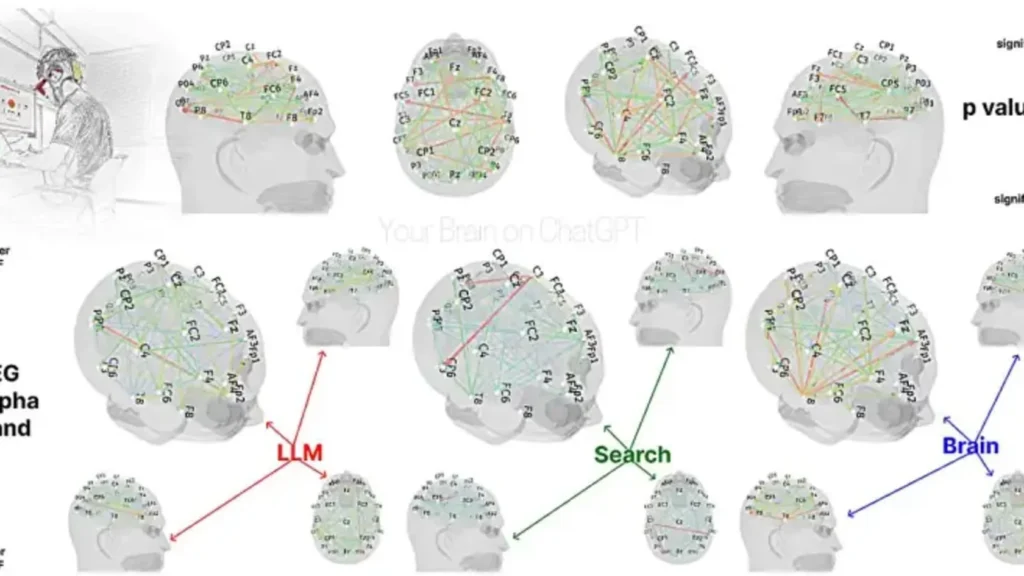

The volunteers were divided into three groups and given 20 minutes to write an essay on the same topic: one group performed the task exclusively “with their own mind”, without any help from digital technologies, another used search engines, and the third “composed” together with ChatGPT.

Each participant’s brain activity was monitored during the task using electroencephalography. As one would expect, the “brain only” group showed the most intense mental activity and involvement in the task at hand. Its members most strongly engaged the brain areas responsible for memory and planning. This resulted in more original and more deeply developed texts.

The ChatGPT group showed a striking contrast: a relatively low overall degree of brain engagement. It turned out that, because they relied on the chatbot, the authors of these essays thought about the topic rather superficially and demonstrated underdeveloped critical thinking: they accepted whatever the chatbot wrote as correct “by default” and did not realize that the neural network provides “biased” information, i.e. information shaped by the preferences of a particular user.

Moreover, these participants did not even remember their own essays: when asked a few days later to quote their text or at least recall the gist of their reasoning, they struggled. They reportedly did not quite feel like “authors” or “owners” of their own essays.

Interestingly, to complete the picture, some of the test subjects were later swapped: those who had composed with the help of ChatGPT had to work independently, and vice versa. It turned out that after using chatbots, people are reluctant to strain their brains and perform the same task worse than those who are used to doing it on their own. The opposite tendency was also observed: those who initially wrote by themselves were in no hurry to relax when the opportunity arose to delegate part of the work to a robot.

Those who used search engines fell somewhere between the other two groups: they were not as good at “working with their heads” as the fully independent writers, but they were not as lazy as the ChatGPT users.