Apple unveiled Matrix3D, a neural network for photogrammetry

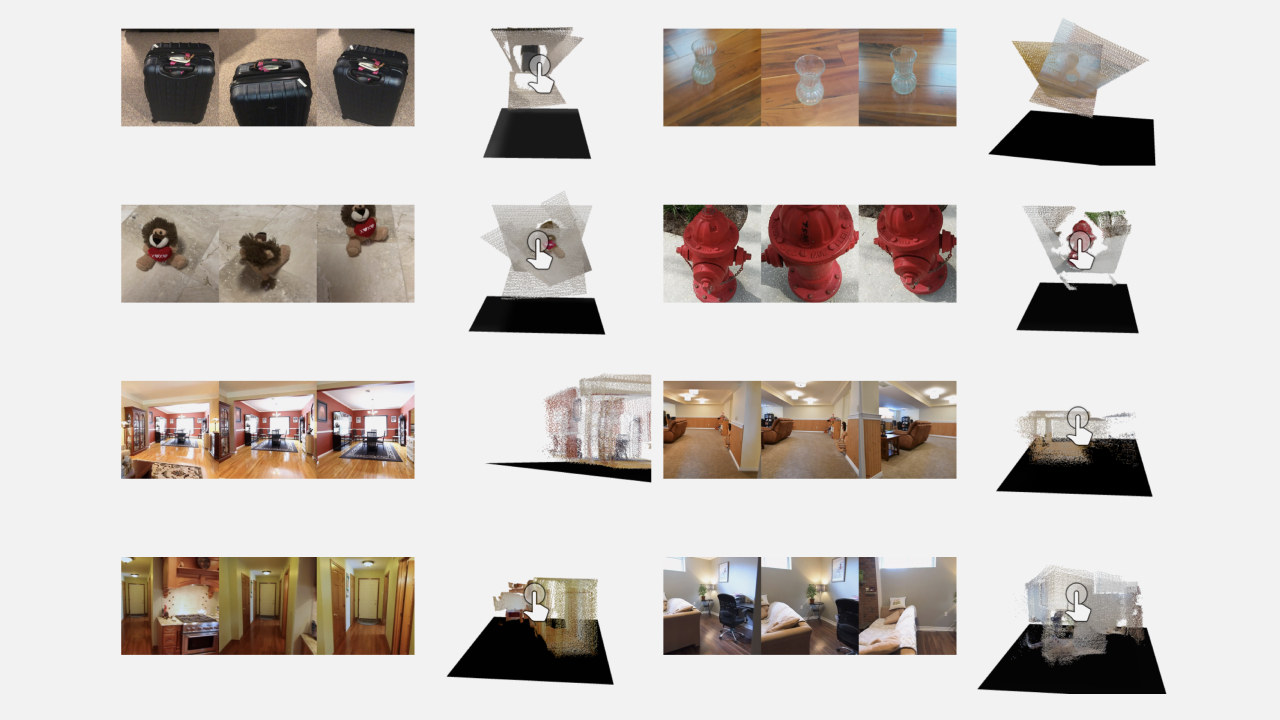

Apple researchers have unveiled Matrix3D, a general-purpose neural network for photogrammetry. With it, users can turn sets of photos of an object into complete 3D representations. The company has released the weights and described the method’s implementation in detail.

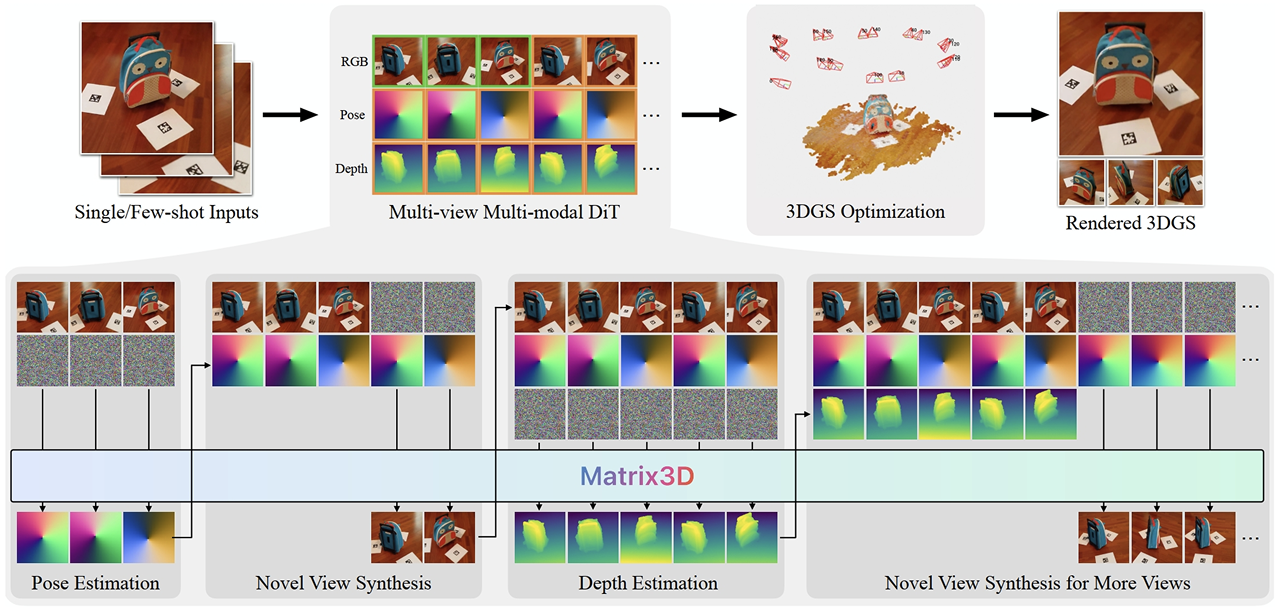

Matrix3D is a unified model that handles several tasks at once: camera pose estimation, novel view generation, and per-frame depth prediction. All of this is done by a single multimodal diffusion transformer. As a result, the pipeline becomes simpler, since there is no need to chain several models together, and generation accuracy improves.

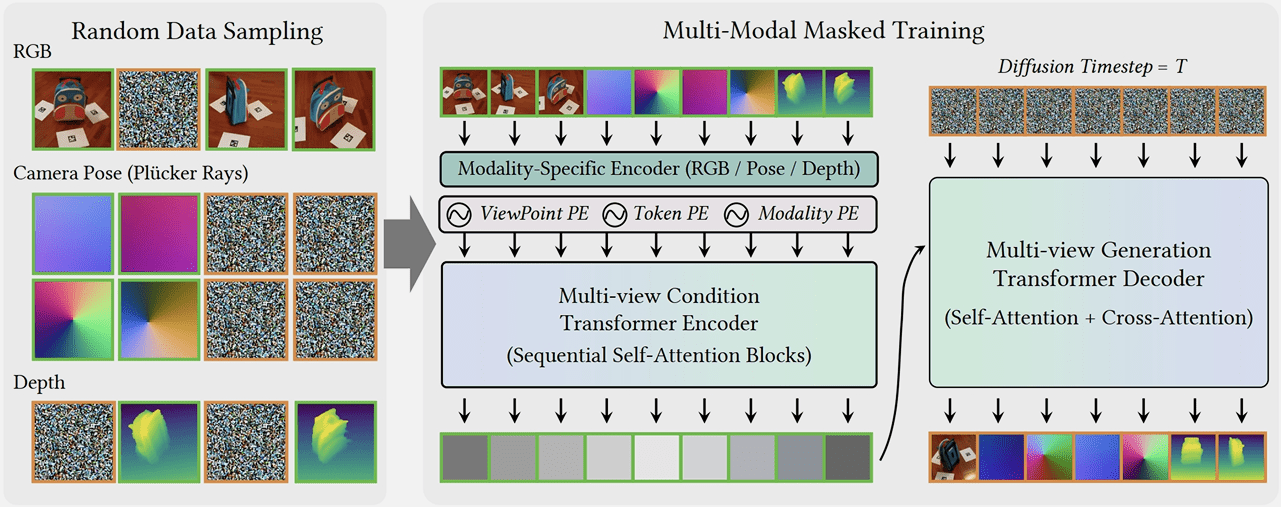

The neural network was trained with masking, in the style of a Masked Autoencoder (MAE): the model is fed pairs of images and camera viewpoints with part of the information hidden, and it tries to fill in the missing parts. This in turn lets it generate missing views effectively and use data from physical sensors during generation. The masked training also helps Matrix3D predict object depth from just three frames.
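The masking idea can be illustrated with a toy sketch. This is not Apple's code: the function `mask_views`, the modality names, and the `mask_ratio` parameter are all hypothetical, and the real model works on transformer tokens rather than dictionary entries. The sketch only shows how a training sample might be split into a visible part and a hidden part that the model has to reconstruct.

```python
import random

# Hypothetical modality names, chosen for illustration only.
MODALITIES = ("image", "pose", "depth")


def mask_views(views, mask_ratio=0.5, seed=None):
    """Split per-view data into visible inputs and hidden targets.

    `views` is a list of dicts mapping a modality name to its data.
    A random subset of (view, modality) slots is hidden; at least one
    slot always stays visible so there is something to condition on.
    """
    rng = random.Random(seed)
    slots = [(i, m) for i, view in enumerate(views) for m in MODALITIES if m in view]
    n_hidden = max(0, min(len(slots) - 1, max(1, round(mask_ratio * len(slots)))))
    hidden = set(rng.sample(slots, n_hidden))

    visible = [{m: v for m, v in view.items() if (i, m) not in hidden}
               for i, view in enumerate(views)]
    targets = [{m: v for m, v in view.items() if (i, m) in hidden}
               for i, view in enumerate(views)]
    return visible, targets


if __name__ == "__main__":
    # Placeholder strings stand in for real tensors.
    sample = [
        {"image": "img_0", "pose": "pose_0", "depth": "depth_0"},
        {"image": "img_1", "pose": "pose_1", "depth": "depth_1"},
    ]
    visible, targets = mask_views(sample, mask_ratio=0.5, seed=0)
    print("visible:", visible)
    print("to reconstruct:", targets)
```

Under this framing, each task corresponds to a particular mask: hiding an image asks for a new view, hiding a pose asks for camera estimation, and hiding depth asks for a depth prediction.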

The researchers have released the code and the model weights. The repository includes a guide to running Matrix3D. The developers note that they tested the neural network on Ubuntu 20.04 with PyTorch 2.4 and Python 3.10. They recommend recreating the environment with all dependencies before running it, and some of those dependencies require CUDA.
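Before following the repository's guide, it can help to compare a local setup against the tested configuration. The script below is a minimal sketch and is not part of the Matrix3D repository: the helpers `version_matches` and `check_environment` are hypothetical, and the only values taken from the article are the Python 3.10 and PyTorch 2.4 versions and the CUDA requirement.

```python
import importlib.util
import sys


def version_matches(found, expected):
    """Compare only the major.minor part, ignoring suffixes like '+cu121'."""
    return found.split("+")[0].split(".")[:2] == expected.split(".")[:2]


def check_environment():
    """Return a list of notes where the local setup differs from the tested one."""
    notes = []
    python_version = f"{sys.version_info.major}.{sys.version_info.minor}"
    if not version_matches(python_version, "3.10"):
        notes.append(f"Python {python_version} found, tested with 3.10")

    if importlib.util.find_spec("torch") is None:
        notes.append("PyTorch is not installed, tested with 2.4")
    else:
        import torch
        if not version_matches(torch.__version__, "2.4"):
            notes.append(f"PyTorch {torch.__version__} found, tested with 2.4")
        if not torch.cuda.is_available():
            notes.append("CUDA is not available, some dependencies need it")
    return notes


if __name__ == "__main__":
    for note in check_environment() or ["Environment matches the tested setup"]:
        print(note)
```

A mismatch does not necessarily mean the model will fail to run; it only flags where the setup departs from what the developers report testing.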